Type out a few words — then, like magic, the image is transformed.

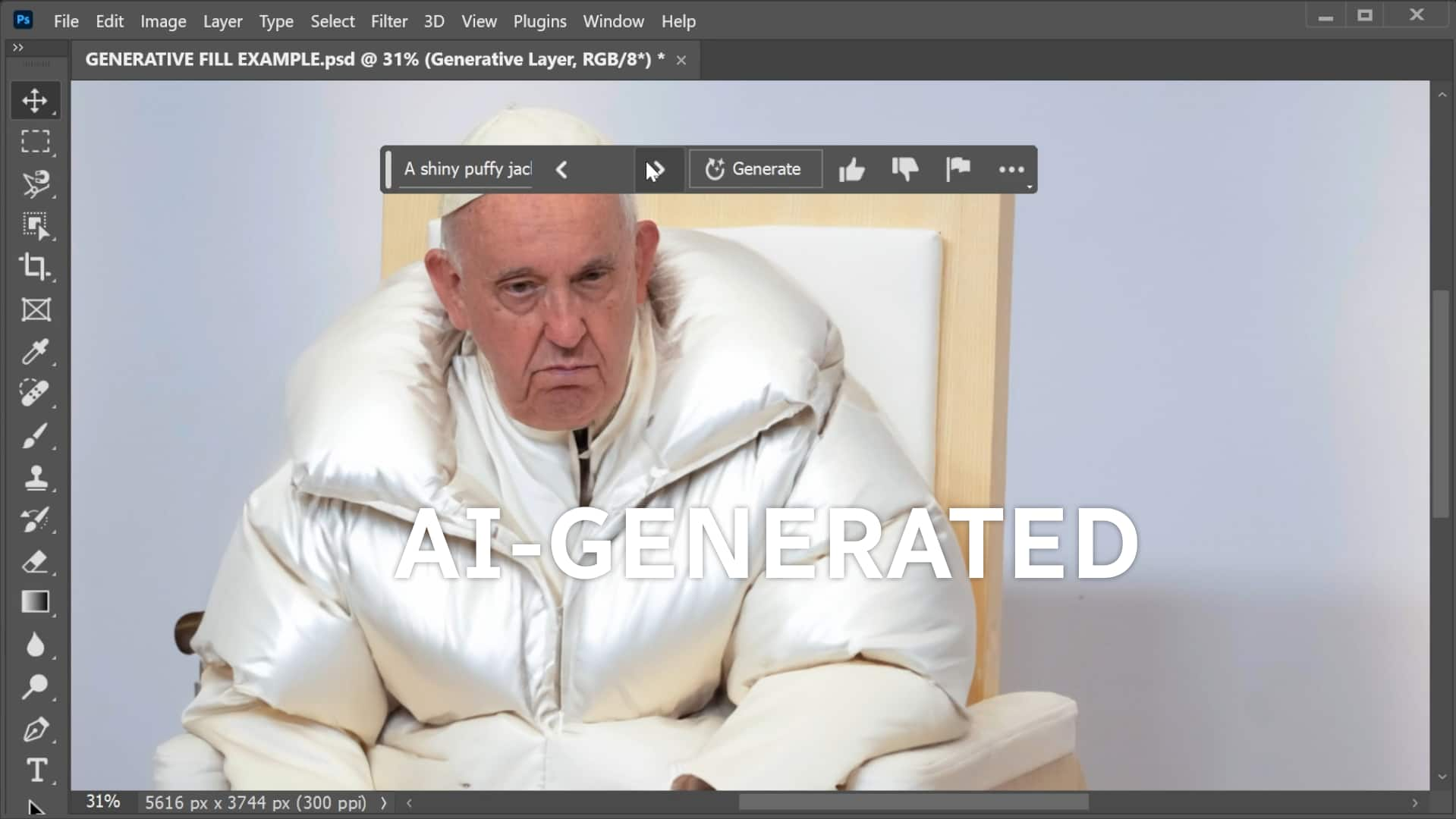

With the new version of Photoshop out last week, users are able to easily manipulate or add to an image in seconds, simply by giving the program a prompt. The beta release of Adobe’s new “Generative Fill” feature comes on the heels of several other advances in image generation software in the last year.

The feature is expected to be widely released in the second half of 2023, which means we can expect to be inundated with more and more manufactured images (Adobe’s creative products are estimated to have tens of millions of users).

“We’re moving into a world where you and I can no longer believe that if we see a picture of the Pope wearing a puffer jacket and walking outside the Vatican, that it’s actually him,” said Maura Grossman, a research professor in the school of computer science at the University of Waterloo who has been studying the real-world implications of AI-generated images.

In March, an image of the Pope was created with another program, Midjourney, and posted to Reddit. It was shared widely online, with many initially believing it was real, illustrating both the power and peril of such technology.

With the new Photoshop feature, CBC News was able to make another version of the Pope in a puffer jacket in less than a minute.


In testing the feature, CBC quickly created several other images, some with far greater potential consequences. A photo of a protest crowd, for instance, was made much larger within seconds. CBC created these images only to demonstrate how quick and easy the process is, and did not save them for future use.

Risks and rewards

The technology has exciting possibilities for those in creative fields (though there are hiccups at this early stage and some of the images clearly look doctored).

But it will also make it more and more difficult to distinguish between what’s real and what isn’t.

Just last week, a doctored image of the Pentagon exploding into flames caused the U.S. stock market to briefly dip after it was picked up by several international outlets.

Adobe encourages users of Photoshop’s AI feature to use what it calls “content credentials,” which the company describes as a “nutrition label” for images, designed to make clear whether a piece of content is AI-generated or AI-edited.

Currently, that feature is optional — and easily removed.

“There’ll be many bad actors who’ll be able to get around that,” said Grossman.

WATCH | Times we were tricked by AI:

Andrew Chang breaks down the consequences of faking high-profile photos after a couple of recent AI images went viral: the Pope in a puffer coat and former U.S. president Donald Trump getting arrested.

The implications are potentially far-reaching, Grossman said.

Grossman, who is also an adjunct professor at Osgoode Hall Law School, recently published a journal article on the potential effects of doctored images and other generative AI on the court system.

“I think in terms of creating new drugs and some of the stuff that will be able to be done in health and efficiency in business — fabulous — but in other areas there are real risks.”

Underlying issues

But another expert, Felix Simon, argued that a single advance in image generation technology is not a major cause for concern.

“Photoshop, for instance, has been around for ages, and so have other freely accessible and fairly easy-to-use photo manipulation tools,” said Simon, a communication researcher and doctoral scholar at the Oxford Internet Institute.

“As far as I can remember we haven’t seen ‘truth’ collapse in on our heads — or at least not thanks to these tools and the things you can do with them.”

The bigger issue, in his view, is why people create and share false information in the first place, and how those underlying causes can best be addressed.

“A lot of the misinformation and disinformation we see already out on the internet or offline and other contexts is not even necessarily the kind of material that is particularly sophisticated,” he said.

“People still get fooled, for instance, by fairly easy manipulations.”

Going forward, he said, there will need to be greater emphasis on improved regulation of this technology and, at an individual level, on getting information from trusted sources.

“I think that’s the most important thing you can do … as an individual and as part of the broader public.”