Researchers have developed a robot capable of performing surgical procedures with the same skill as human doctors by training it using videos of surgeries.

The team from Johns Hopkins and Stanford Universities harnessed imitation learning, a technique that allowed the robot to learn from a vast archive of surgical videos, eliminating the need for programming each move. This approach marks a significant step towards autonomous robotic surgeries, potentially reducing medical errors and increasing precision in operations.

Revolutionary Robot Training

A robot has, for the first time, learned to perform surgery by watching videos of experienced surgeons, achieving a skill level comparable to human doctors.

This breakthrough in imitation learning for surgical robots means that robots no longer need to be programmed with each individual movement required in a medical procedure. Instead, they can learn by observing, bringing robotic surgery closer to full autonomy—where robots might one day carry out complex surgeries without human help.

Breakthrough in Surgical Robotics

“It’s really magical to have this model and all we do is feed it camera input and it can predict the robotic movements needed for surgery,” said Axel Krieger, the study’s senior author. “We believe this marks a significant step forward toward a new frontier in medical robotics.”

The Johns Hopkins University-led findings are being showcased this week at the Conference on Robot Learning in Munich, a premier event in robotics and machine learning.

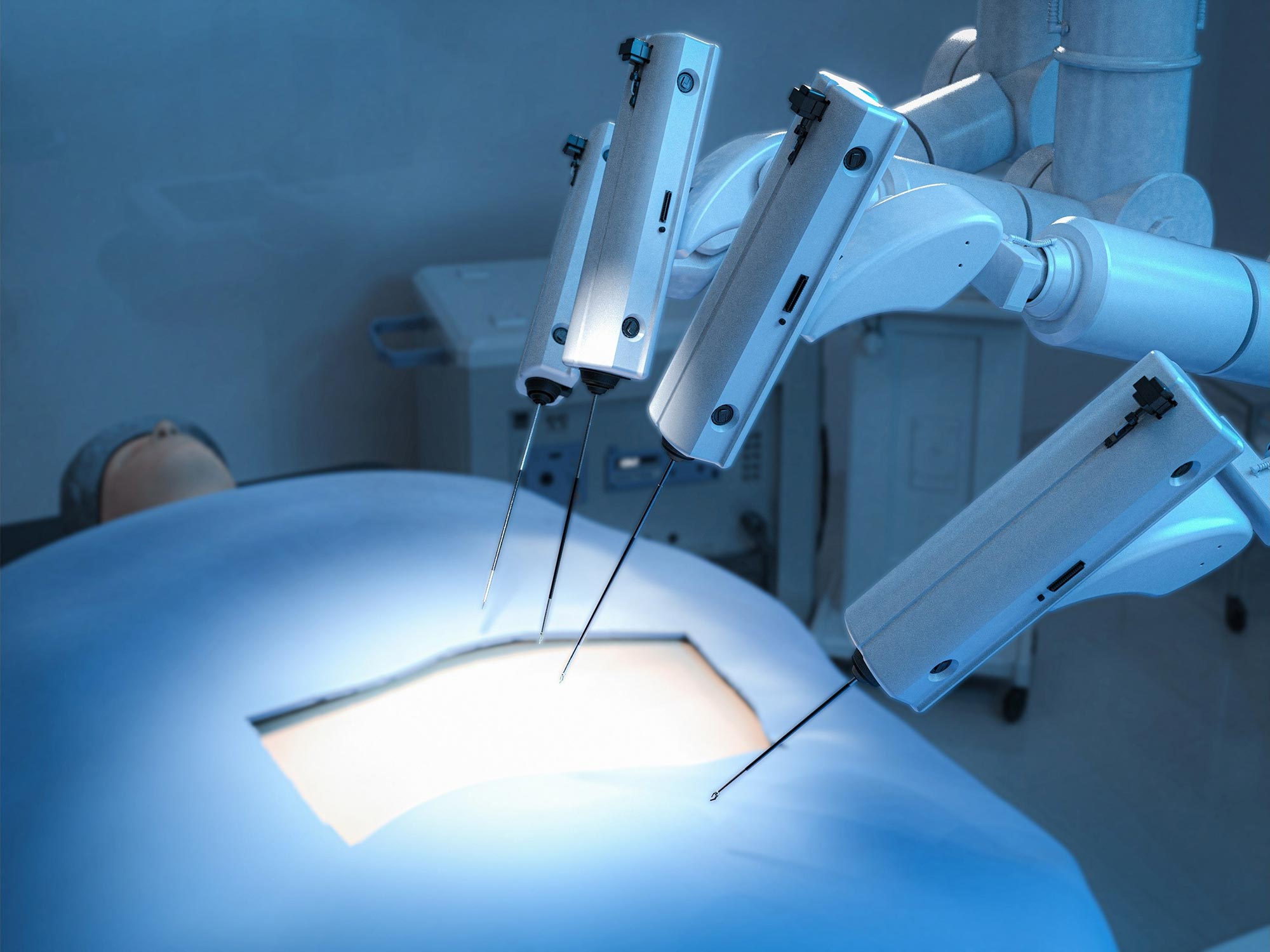

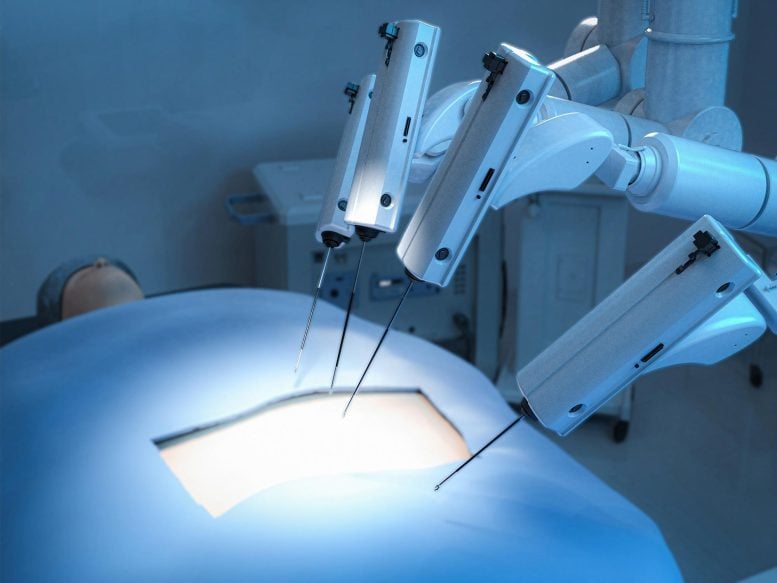

The robot demonstrates several fundamental surgical procedures. Credit: Johns Hopkins University

Enhanced Learning Through Video

The research team, including Stanford University collaborators, used imitation learning to train the da Vinci Surgical System robot in core surgical tasks: needle manipulation, tissue lifting, and suturing. This model combines imitation learning with the advanced machine learning architecture behind ChatGPT. Unlike ChatGPT, which operates with language, this robotic model uses kinematics—a mathematical language that translates robotic motion into precise angles and movements.

The researchers fed their model hundreds of videos recorded from wrist cameras placed on the arms of da Vinci robots during surgical procedures. These videos, recorded by surgeons all over the world, are used for post-operative analysis and then archived. Nearly 7,000 da Vinci robots are used worldwide, and more than 50,000 surgeons are trained on the system, creating a large archive of data for robots to “imitate.”

Achieving Precision and Autonomy

While the da Vinci system is widely used, researchers say it’s notoriously imprecise. However, the team found a way to make the flawed input work. The key was training the model to perform relative movements rather than absolute actions, which are inaccurate.
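To see why relative movements can tolerate imprecise position readings, consider a toy sketch. It is not the researchers' model, and it rests on a simplifying assumption that is ours, not the study's: that the robot's reported tool position is off by a constant offset. Under that assumption, the offset cancels when each step is expressed as a change from the previous position. The function names `to_relative` and `add_bias` and all numbers are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def to_relative(positions: List[Vec3]) -> List[Vec3]:
    """Convert absolute positions into step-to-step displacements."""
    return [
        tuple(b - a for a, b in zip(prev, curr))
        for prev, curr in zip(positions, positions[1:])
    ]


def add_bias(positions: List[Vec3], bias: Vec3) -> List[Vec3]:
    """Simulate imprecise readings by shifting every position by a fixed offset.

    Assumption for illustration only: the error is constant over time.
    """
    return [tuple(p + o for p, o in zip(pos, bias)) for pos in positions]


# Hypothetical tool-tip path (arbitrary units).
true_path = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 2.0, 0.0)]

# What the robot "reports" if its absolute readings carry a fixed offset.
reported_path = add_bias(true_path, (0.5, -0.25, 0.125))

# The absolute readings disagree with the truth, but the relative steps match.
print(reported_path == true_path)                            # False
print(to_relative(reported_path) == to_relative(true_path))  # True
```

Real measurement error is unlikely to be perfectly constant, so the cancellation in practice would be approximate rather than exact; the sketch only shows the intuition behind preferring relative over absolute actions.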

“All we need is image input and then this AI system finds the right action,” said lead author Ji Woong “Brian” Kim. “We find that even with a few hundred demos the model is able to learn the procedure and generalize [to] new environments it hasn’t encountered.”

The team trained the robot to perform three tasks: manipulate a needle, lift body tissue, and suture. In each case, the robot trained on the team’s model performed the same surgical procedures as skillfully as human doctors.

“Here the model is so good at learning things we haven’t taught it,” Krieger said. “Like if it drops the needle, it will automatically pick it up and continue. This isn’t something I taught it to do.”

Future Prospects for Medical Robotics

The model could be used to quickly train a robot to perform any type of surgical procedure, the researchers said. The team is now using imitation learning to train a robot to perform not just small surgical tasks but a full surgery.

Before this advancement, programming a robot to perform even a simple aspect of a surgery required hand-coding every step. Someone might spend a decade trying to model suturing, Krieger said. And that’s suturing for just one type of surgery.

“It’s very limiting,” Krieger said. “What is new here is we only have to collect imitation learning of different procedures, and we can train a robot to learn it in a couple of days. It allows us to accelerate to the goal of autonomy while reducing medical errors and achieving more accurate surgery.”

Meeting: Conference on Robot Learning

Authors from Johns Hopkins include PhD student Samuel Schmidgall; Associate Research Engineer Anton Deguet; and Associate Professor of Mechanical Engineering Marin Kobilarov. Stanford University authors include PhD student Tony Z. Zhao.

Funding: U.S. National Science Foundation, Advanced Research Projects Agency for Health