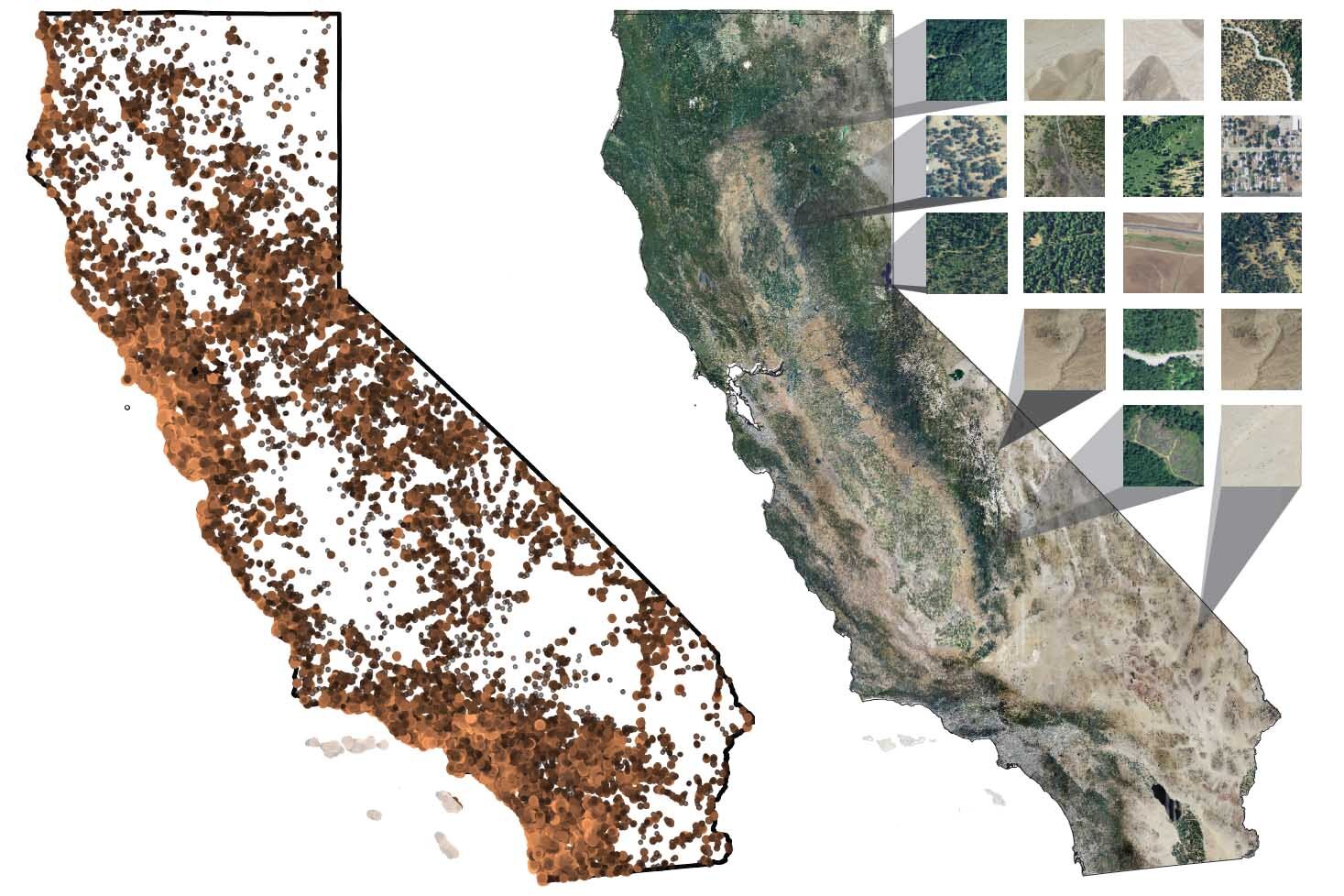

Using advanced artificial intelligence and citizen science data from the iNaturalist app, researchers at the University of California, Berkeley, have developed some of the most detailed maps yet of the distribution of California plant species.

iNaturalist is a widely used cellphone app, originally developed by UC Berkeley students, that lets people upload photos and locations of plants, animals or any other organisms they encounter and then crowdsource their identification. The app currently has more than 8 million users worldwide, who have collectively uploaded more than 200 million observations.

The researchers used a convolutional neural network, a type of deep learning model, to correlate citizen science data for California plants with high-resolution remote sensing images of the state taken from satellites or airplanes. The network learned correlations that were then used to predict the current ranges of 2,221 plant species throughout California, down to scales of a few square meters.
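How Deepbiosphere turns image features into species predictions is not detailed here. As a minimal sketch, assuming the model treats each species as an independent present/absent question (multi-label classification), one raw score per species can be converted to a probability with a sigmoid and kept if it clears a threshold. The species list, the scores and the 0.5 threshold below are hypothetical illustrations, not values from the study.

```python
import math

# Hypothetical species list; the study's model covers 2,221 California species.
SPECIES = ["Sequoia sempervirens", "Quercus agrifolia", "Pinus ponderosa"]


def sigmoid(x: float) -> float:
    """Map a raw score (logit) to a probability in (0, 1), avoiding overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def predict_present(logits: list[float], threshold: float = 0.5) -> list[str]:
    """Multi-label prediction: each species is scored independently, so any
    number of species (including none) can be predicted present."""
    if len(logits) != len(SPECIES):
        raise ValueError("expected one score per species")
    return [name for name, z in zip(SPECIES, logits) if sigmoid(z) >= threshold]


# Hypothetical raw scores a CNN might emit for one image tile.
tile_logits = [2.3, -1.7, 0.4]
print(predict_present(tile_logits))
```

With these hypothetical scores, the first and third species are reported present. A multi-label setup fits the task because many species can share the same few square meters, whereas single-label classification would force exactly one species per image tile.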

Botanists usually build high-quality species maps by painstakingly listing every plant species in an area, but this is not feasible outside a few small natural areas or national parks. Instead, the AI model, called Deepbiosphere, draws on free data from iNaturalist and from remote sensing by airplanes and satellites, which now covers the entire globe. Given enough observations from citizen scientists, the model could be deployed in countries that lack detailed scientific data on plant distributions and habitats to monitor vegetation change, such as deforestation or regrowth after wildfires.

The findings were published Sept. 5 in the journal Proceedings of the National Academy of Sciences by Moisés “Moi” Expósito-Alonso, a UC Berkeley assistant professor of integrative biology, first author Lauren Gillespie, a doctoral student in computer science at Stanford University, and their colleagues. Gillespie currently has a Fulbright U.S. Student Program grant to use similar techniques to detect patterns of plant biodiversity in Brazil.

“During my year here in Brazil, we’ve seen the worst drought on record and one of the worst fire seasons on record,” Gillespie said. “Remote sensing data so far has been able to tell us where these fires have happened or where the drought is worst, and with the help of deep learning approaches like Deepbiosphere, soon it will tell us what’s happening to individual species on the ground.”

“That is a goal—to expand it to many places,” Expósito-Alonso said. “Almost everybody in the world has smartphones now, so maybe people will start taking pictures of natural habitats and this will be able to be done globally. At some point, this is going to allow us to have layers in Google Maps showing where all the species are, so we can protect them. That’s our dream.”

Apart from being free and covering most of Earth, remote sensing data are also more fine-grained and more frequently updated than other information sources, such as regional climate maps, which often have a resolution of a few kilometers. Combining citizen science data with even basic remote sensing images, such as infrared maps that provide only an image and temperature, could allow daily monitoring of landscape changes that are otherwise hard to track.

Such monitoring can help conservationists discover hotspots of change or pinpoint species-rich areas in need of protection.

“With remote sensing, almost every few days there are new pictures of Earth with 1 meter resolution,” Expósito-Alonso said. “These now allow us to potentially track in real time shifts in distributions of plants, shifts in distributions of ecosystems. If people are deforesting remote places in the Amazon, now they cannot get away with it—it gets flagged through this prediction network.”

Expósito-Alonso, who moved from Stanford to UC Berkeley earlier this year, is an evolutionary biologist interested in how plants evolve genetically to adapt to climate change.

“I felt an urge to have a scalable method to know where plants are and how they’re shifting,” he said. “We already know that they’re trying to migrate to cooler areas, that they’re trying to adapt to the environment that they’re facing now. The core part of our lab is understanding those shifts and those impacts and whether plants will evolve to adapt.”

In the study, the researchers tested Deepbiosphere by excluding iNaturalist data for some areas from the training set and then asking the model to predict which plants occur in those excluded areas. The model identified the presence of species with 89% accuracy, compared with 27% for previous methods. They also pitted it against other models developed to predict where plants grow across California and how they will migrate with rising temperatures and changing rainfall. One of these is Maxent, developed at the American Museum of Natural History, which uses climate grids and georeferenced plant data. Deepbiosphere performed significantly better than Maxent.
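Holding out whole areas, rather than random individual observations, keeps test locations geographically separate from training locations, so the score reflects prediction in places the model has not seen. The exact holdout scheme used in the study is not described here; this minimal sketch assumes a simple square latitude/longitude grid, and the `Observation` class, the 0.5-degree block size and the holdout fraction are hypothetical choices.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical record: one geolocated plant sighting."""
    lat: float
    lon: float
    species: str


def block_id(obs: Observation, size_deg: float = 0.5) -> tuple[int, int]:
    """Assign an observation to a square grid cell `size_deg` degrees wide.
    math.floor (not int) keeps negative longitudes in the correct cell."""
    return (math.floor(obs.lat / size_deg), math.floor(obs.lon / size_deg))


def spatial_split(observations: list[Observation], holdout_fraction: float = 0.2,
                  size_deg: float = 0.5, seed: int = 0):
    """Hold out whole grid cells, so no test cell contributes training data."""
    blocks = sorted({block_id(o, size_deg) for o in observations})
    if not blocks:
        return [], []
    rng = random.Random(seed)
    n_test = min(len(blocks), max(1, round(len(blocks) * holdout_fraction)))
    test_blocks = set(rng.sample(blocks, n_test))
    train = [o for o in observations if block_id(o, size_deg) not in test_blocks]
    test = [o for o in observations if block_id(o, size_deg) in test_blocks]
    return train, test


# Hypothetical sightings; nearby points may share a grid cell.
sightings = [
    Observation(41.3, -124.0, "Sequoia sempervirens"),
    Observation(41.4, -124.1, "Sequoia sempervirens"),
    Observation(37.8, -119.9, "Pinus ponderosa"),
    Observation(34.1, -118.3, "Quercus agrifolia"),
    Observation(34.2, -118.2, "Quercus agrifolia"),
]
train, test = spatial_split(sightings, holdout_fraction=0.25)
print(len(train), len(test))
```

Splitting on random individual points instead would let near-duplicate neighboring observations land in both sets, which tends to inflate the measured accuracy.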

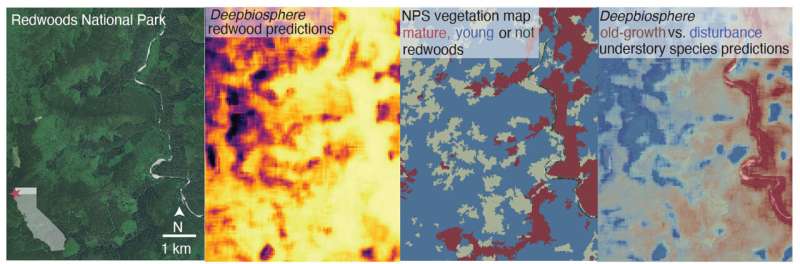

They also tested Deepbiosphere against detailed plant maps created for some of the state’s parks. It predicted with 81.4% accuracy the location of redwoods in Redwood National Park in Northern California and accurately captured (with R² = 0.53) the burn severity caused by the 2013 Rim Fire in Yosemite National Park.
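R² (the coefficient of determination) measures how much of the variation in observed values a model's predictions explain: 1 means a perfect fit, 0 means no better than always predicting the mean, and it can even be negative when predictions are worse than the mean. The study's exact R² calculation is not given here (some analyses instead report a squared Pearson correlation), so this is a minimal sketch of the common 1 - SS_res / SS_tot definition, using hypothetical burn-severity numbers.

```python
def r_squared(observed: list[float], predicted: list[float]) -> float:
    """Coefficient of determination: R^2 = 1 - SS_res / SS_tot."""
    if len(observed) != len(predicted) or len(observed) < 2:
        raise ValueError("need two or more paired values")
    mean_obs = sum(observed) / len(observed)
    # Residual sum of squares: error left over after the model's predictions.
    ss_res = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    # Total sum of squares: spread of the observations around their mean.
    ss_tot = sum((y - mean_obs) ** 2 for y in observed)
    if ss_tot == 0:
        raise ValueError("observed values are constant; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


# Hypothetical mapped burn severity (observed) vs. model output (predicted).
observed = [0.2, 0.5, 0.9, 0.4, 0.7]
predicted = [0.3, 0.4, 0.7, 0.5, 0.6]
print(round(r_squared(observed, predicted), 2))
```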

“What was incredible about this model that Lauren came up with is that you are just training it with publicly available data that people keep uploading with their phones, but you can extract enough information to be able to create nicely defined maps at high resolution,” Expósito-Alonso said. “The next question, once we understand the geographic impacts, is, ‘Are plants going to adapt?’”

Megan Ruffley of the Carnegie Institution for Science at Stanford is a co-author of the paper.

More information:

Lauren E. Gillespie et al., Deep learning models map rapid plant species changes from citizen science and remote sensing data, Proceedings of the National Academy of Sciences (2024). DOI: 10.1073/pnas.2318296121

Provided by

University of California – Berkeley

Citation:

AI empowers iNaturalist to map California plants with unprecedented precision (2024, October 12) retrieved 12 October 2024 from https://phys.org/news/2024-10-ai-empowers-inaturalist-california-unprecedented.html
