Why social media turned toxic and how we can fix it

Bill Ready is the chief executive of Pinterest, the image-sharing platform known for its creative and quirky user base, whose photos of home decor, food, weddings and more comprise what the company calls the “best ideas . . . for all of life’s moments”.

When Ready took the role in June 2022, he walked into a period of upheaval for tech stocks: an advertising slump, macroeconomic turmoil, and sector job cuts. At the same time, social media companies were battling a wave of criticism from politicians and the public, who felt that the companies prioritised profit over safety, hooking users with algorithmically curated content and online popularity contests — all while harvesting their data.

Today, those fears have only intensified as platforms such as Elon Musk’s X have cut back on their moderation policies and resources, citing the imperative of free speech.

But Ready — previously an entrepreneur, PayPal’s chief operating officer and president of commerce at Google — claims social media can be a kinder place. In conversation with the FT’s technology correspondent, Hannah Murphy, he talks about his vision for a less toxic platform that can use artificial intelligence (AI) for good, but still grow, make money, and satisfy activist investors.

Hannah Murphy: When Pinterest first went public, I described it in the FT as the world’s most wholesome social media platform. Do you think that’s accurate? Does Pinterest still intend to be this wholesome place?

Bill Ready: Absolutely, yes. In fact, that’s one of the biggest things that attracted me to Pinterest when I joined. One of the things that I really want to do here is to prove a different business model for social media, one built on positivity.

While that was an attribute of the platform previously, we’re really doubling down on that, because we want to give consumers a real choice in where they spend their time. Social media has been delivering engagement where content that triggers you — things that will get you to keep watching — rises to the top. We’re taking a very different approach, where we consciously choose to tune our AI for positivity, to show things that will help people feel better, feel more uplifted, take more real-world action in their life.

HM: Is that the answer, the tuning of the AI? How do you rid your platform of toxicity, then?

BR: Yes, it is. This question of tuning AI is central to how social media became so toxic. Think about how your own social media world has evolved over the past decade. When social media started out, it was a chronological view of what your friends posted, right?

HM: Yes.

BR: And, over time, it more and more became a view of what the algorithms thought you should see. The AI was told to maximise your view time, and it figured out that the things that would make you watch the longest were the ones that triggered you the most — whether it was the politician that really got you fired up, or whether it was things that made you covet somebody else’s fake perfect life.

As human beings, we’re still wired the same way we were 100,000 years ago, when your brain was given a choice. Which thing should you pay more attention to? The thing that could be a nice lunch, or the thing that could make you [its] lunch? And, obviously, the thing that could make you [its] lunch required more of your attention. Things that drive fear, anger, envy, greed will grab the base of your brainstem, and the AI figured that out.

The way that we’re approaching creating a more positive place on the internet is twofold. It’s, one, tuning the AI specifically for positivity — not just for maximising view time, but also for maximising emotional wellbeing, for making people feel better, not worse. And, two, part of what we think is imperative is mixing in more conscious choice from users.

What we found on our platform, when it was starting to incorporate more short-form video, was that [the AI was] maximising for view time. And what was rising to the top? The same triggering, made-you-look content that you would see on other platforms.

And we said, OK, how do we make sure that we’re tuning for positivity? One of the things that we found was that, if we set the AI to tune more for explicit signals — things like saves, or click to purchase, or what users were actually choosing to go look at — all of a sudden, the outcomes reflected what users actually chose. Instead of triggering content rising to the top, it was self-help and do-it-yourself videos, and things that were related to people’s passions and interests — whether fashion, or beauty, or music.

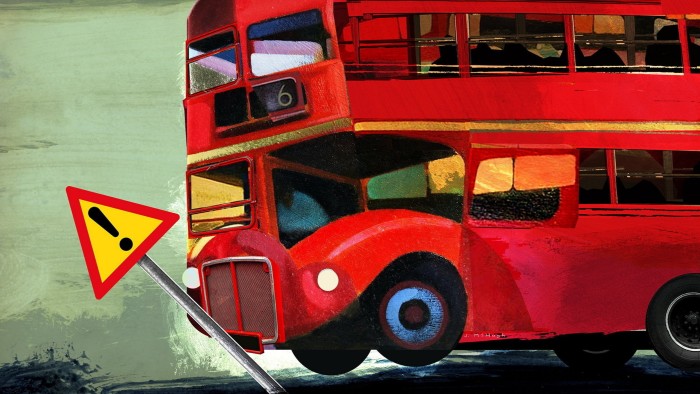

The metaphor I use for this is that it’s like driving down the road and approaching a car crash up ahead. We all know you shouldn’t look, right? But everybody peeks. With algorithmically derived feeds, the AI says: “You looked, [so] I should show you another car crash. And you looked at that one, too. [So] I should show you another car crash. You looked at that one, too . . . ” Until, eventually, your feed is filled with nothing but car crashes. That is what has happened with social media.

But, if you ask somebody after they’ve seen the car crash, “Do you want to see another one of those?”, the vast majority of people will say, “Goodness, no, that was terrible.”

HM: The reason platforms have evolved to encourage toxicity is that it’s effective: it delivers more time for showing adverts, and we like these grabby things. So how convincing is a business model based on positivity? Is it that you attract a different audience? Or is it that, if people aren’t led down the rabbit hole, they won’t choose to go down it, and they’ll just generally be happy, and you can lead the way?

BR: Yes, that’s it. The great thing is we are already seeing this cut through, with both consumers and advertisers.

Gen Z has become the largest, fastest-growing demographic on our platform, and one of the key reasons Gen Z cites is that it sees Pinterest as an oasis away from the toxicity of the rest of social media.

And advertisers are becoming more aware that so much of what’s driving the view time on a lot of social media is negative content, and are increasingly looking for brand-safe environments. The vast majority of brands are built on positivity, and so we’re seeing a more positive platform resonate with advertisers.

The last thing I’d say on this is that we are a much more action-oriented platform than others. Most of social media is entertainment-based, with a lean-back consumption mode. On Pinterest, people come with intent and purpose, in a lean-forward mode, whether it’s to make or to do or to buy.

HM: Obviously, you’ve had Elon Musk’s X going in quite a different direction, with Musk suggesting that free speech and moderation are at odds with one another. Is free speech a consideration that comes into play for you, or not so much because of the format of the platform?

BR: Social media has really started to consume all of media. And a big thing that has shifted is that, even 10 years ago, 15 years ago, consumers made explicit choices in the media they were going to consume. If somebody wanted to turn on a 24-hour news channel and listen to people shout about politics, or wanted to put on family-friendly content, they could make an explicit choice to do that.

If social media becomes the vast majority of your media consumption, you have lost that choice. On most platforms, your only choice is to swipe, to continue scrolling.

In the same way that, in the prior media world, people could choose what channel they tuned into, we want to bring that choice to social media . . . [When they say] I want to go to a place that helps me invest in myself . . . I want to go to a place where I don’t have to perform for others and try to show off a fake perfect life, we want them to know that Pinterest is that place.

And, to be that place, it means we consciously don’t bring politics into the platform. For example, we don’t allow political advertisements. We’re making real business choices to say, yes, we don’t want that revenue, we think there’s different revenue that we’d rather have.

HM: How is that working with advertisers?

BR: Back at Cannes Lions [an annual festival for the advertising and communications industries, held in June], we launched the Inspired Internet Pledge, in which there were two things that we were doing.

One of them was saying that social media has to drive more change. There’s been all this talk of tactics — but, at the end of the day, do people feel better or worse after they spend time on your platform? That’s what we called on the social media industry to [measure], and we said, “Hey, we will raise our hands and go first — we are going to measure emotional wellbeing outcomes, and we are committed to better outcomes over time.”

The second thing we did is, we called on the advertisers to vote with their dollars — and, by the way, not just vote for us. Hold us accountable; hold the whole industry accountable for more positivity. And I think there’s good signs of progress: you do see more and more advertisers being quite conscious of where they spend their dollars. Advertisers need to be more accountable than they have been because, if you look at much of the toxicity of social media, advertisers continue to fund a lot of these platforms.

HM: At the moment, there’s a lot of bipartisan concern about teen wellbeing and child safety. How are you addressing those fears? Surely the hunt to get young users hooked on a platform as soon as possible, as part of running a profitable business, is at odds with caring for their wellbeing?

BR: Yes, these things are at odds — until consumers and parents have an alternative. We’ve seen this play out over and over again. To draw a parallel from another industry, think back to [US consumer advocate] Ralph Nader’s Unsafe at Any Speed [a 1965 book accusing carmakers of neglecting safety].

You had most of the auto industry advocating that safety belts were against the business model, right? [But then] not only did you have regulatory and public outcry, you also started to have car companies [taking action]: Volvo comes along and invents the three-point safety belt, then starts to build a brand and differentiation on safety. All of a sudden, consumers had choices and, now, every auto manufacturer competes on safety. I hope that can be a future state for social media.

HM: You want to be the Volvo of socials?

BR: Yes, it only takes one to change an industry, right? I hope not only that we can differentiate ourselves on it, but that safety can start to become an aspect that the industry competes on.

To your point of business model, I do think this is a place where we see platforms making very different choices. If you take teen safety, there are always going to be bad actors who try to exploit platforms. Then the question is, what do you do to combat it?

On the teen safety front, earlier this year, we moved to private-by-default for users under 18. For users under 16, it is private-only — not just private-by-default. Of course, that took away some engagement and revenue in the short term. But we were willing to make that trade because we felt that, in the long term, not only was it morally the right thing to do [but we can] build the business on safety — it can be a real differentiator for the business.

HM: You mentioned the ecommerce side earlier. Many of your rivals — Instagram, Snap — pushed deeper during the pandemic into ecommerce with mixed results, and have pulled back somewhat. It feels like western social media hasn’t quite nailed shopping properly — is it fair to say that?

BR: Yes, I think that’s fair to say across social media, broadly. This was one of the things that I found so compelling about the opportunity to join Pinterest.

Part of why social media has really struggled to solve for shopping is that it’s exceptionally difficult, darn near impossible, to change the mode that a user’s in: to get the user to go from lean-back to lean-forward. One of the things that I found really compelling about Pinterest is that the user is in that lean-forward mode. More than half the people on Pinterest say they are there to shop.

The thing that needed to be solved for Pinterest was that, in a lot of ways, Pinterest had solved digital window shopping, but all the stores were closed: you couldn’t take action on the things that you would find. And as we’ve been bringing that actionability on to the platform, we’ve been seeing that work really well with users, whether you measure that by clicks or conversions.

HM: Inevitably, I’d like to ask about AI. How have you taken advantage of recent advances?

BR: AI is a core competency for us. We have made phenomenal progress over the past 18 months, leveraging next-gen AI on multiple fronts, including pairing it with the human curation that happens on our platform — people making product associations and saying this handbag goes with this dress and these shoes. That becomes a really rich signal for the AI to know better and better what’s going to be a fashionable outfit for the user.

I’ll give you a few tangible examples. When we recommend something and go, ‘We think you’d like this, or we think you’d like that’, we’re now getting a 95 per cent relevancy score with users. That’s up a full 10 per cent in the last year, and that’s really been based on next-gen AI. Our large language models that we’re using, our relevancy models, are now more than 100 times larger than they were just a year ago.

Another place where we’ve made progress with AI is on our ad platform, where we’re getting to the ads being more and more relevant for the users.

And the last example I’d cite, and, in fact, the most important one, is trust and safety. One of the things I’m most proud of is that, over the past 18 months, we’ve proven that it is possible to tune the AI for positivity, to lead to better emotional wellbeing.

There’s a bunch of other elements within that. [On body type] we’re helping to show a more representative sample of what the real population actually looks like in our feed, so people don’t get so locked into the idea that there’s only one ideal of perfection. We’ve seen that with our skin tone diversity, too.

And we’ve made tremendous progress over multiple years on using AI to detect harmful content and to remove it. We’re also seeing that we can move beyond just the most harmful content, and towards things like depressing content, depressive quotes, things like that.

There, we found that we could use next-gen AI to [identify] things that may be sad or depressing — and not to prevent people from accessing them, because sometimes that’s OK, sometimes a great sad song lets you have a cry and work through emotions. But, when it becomes all you see, and you get taken down a rabbit hole, it can become overwhelming.

People can explore some of these things, but we can also bring in more uplifting content. And, if it’s really moving down that path, we can make sure we’re providing emotional wellbeing support tools, or the ability for users to find professional help.

HM: You used the phrase “next-gen AI”. Is that different from generative AI? What do you mean by next-gen AI?

BR: These things get conflated a bit. The core technology is the same, and what gets a lot of attention is the generative part — where I can ask it to paint a picture for me, or I can have a conversation with it.

But you can also use that same technology [in other ways]. In the first phase of AI, so much of the value accrued to the [large language] model creators. I think, in the next phase, value is going to accrue to others, in the same way it did with cloud computing. Basically, cloud computing made it so that really complicated computing functions became building blocks that were available to anybody and, then, people could assemble those building blocks in different ways.

The first LLMs are going to be made available to everybody and, then, the next wave of value will be less about the model builder, and more about who has a unique signal to feed the AI. We have both our own LLMs that we have created, as well as LLMs that others have built — and, then, we are using signals that are proprietary to our platform, to create different outcomes: things like better relevancy for shopping recommendations, or more ability to spot depressing content.

HM: Is a Pinterest chatbot on the horizon?

BR: At its core, Pinterest is a visual search and discovery platform, and so we are much more focused on the visual nature of the interaction. Often, people lack the words to describe the thing that they’re looking for, right?

Think about the way you’d shop in the real world. If you walked into a boutique, and there was a good associate working there, would you say: “I’m looking for a dress in exactly this style, with exactly this aesthetic, and then I want a handbag with this aesthetic and shoes with this aesthetic”?

Or would you be more likely to say: “I’m looking for a great outfit for a holiday party, and I want it to feel like this”? [You would probably use] those general descriptors, and the visual nature of our platform helps people explore what they don’t have words for. Therefore, we don’t see a chatbot as the right modality.

HM: Looking beyond Pinterest at the social media space more generally, is AI going to make social media better or worse? In five years from now, where do you see us?

BR: As with any technology, AI can be used for good or for bad, and even more often it can lead to unintended consequences, if you aren’t thoughtful about it.

So you will see examples of all three of those things. You’ll see examples of AI used for good, and we are extremely focused in that category. And you will see, and are already seeing, AI used for nefarious purposes — all the more reason for tightening up trust and safety.

[More common still will be] people who have not been thoughtful and intentional in using AI, which leaves you exposed to unintended consequences. That’s been the story of social media for the last decade.

HM: I’d like to ask about one of your investors, Elliott Investment Management, with which you’ve had a long-term co-operation agreement since 2022, including a seat on the board. Pinterest may be a very wholesome platform, but Elliott is hardly famous for being soft and fluffy. How is it shaping your strategy?

BR: They’ve actually been a great partner. One of the things I talked about at the beginning is that I intend to prove that there’s a real business model built on positivity in social media, and that we can do well by doing good.

Think about electric vehicles. It’s not that long ago that most car companies were funding climate misinformation, or spoofing emissions tests. And, now, every car company is pushing their electric vehicles. What changed? It wasn’t a change in morality. It was, all of a sudden, the fact that electric vehicles are more profitable per unit than combustion engine vehicles. There’s a good business model in electric vehicles and, now, you have a whole industry moving in that direction. That’s what I hope can happen in social media.

We shared this with our investors. Our most recent user cohorts are our most engaged — in fact, by nearly twofold, which is almost unheard-of in product development. Normally, you start out with your power users and, over the years, each successive cohort’s a little less engaged.

So, for our broader investor community, we are showing that positivity for social media doesn’t have to just be charitable, it can be a good business model. And that can actually change an industry. I would love that to be the outcome of our work.

HM: I have one final question, just to pick up on that. I hear the pitch. I hear the mission, loud and clear. What’s working against you? What are the challenges going forward?

BR: The challenges are that it is a hypercompetitive space and there are plenty of people who’re still maximising view time, plenty of people who’re going to throw caution to the wind on AI, plenty of people who, when they see bad actors on their platform, are not going to sacrifice some growth to do the right things for users.

Those things can create tough competitive dynamics. But my hope is that the more we show there’s a real alternative, the more users and advertisers will make a more conscious choice. By no means can we say mission accomplished yet — but I think we have proven it’s possible. My hope is that we can get an industry rallied behind it, but that’s years and years of work ahead.

The above transcript has been edited for brevity and clarity.