In the quest to uncover the fundamental particles and forces of nature, one of the critical challenges facing high-energy experiments at the Large Hadron Collider (LHC) is ensuring the quality of the vast amounts of data collected. To meet this challenge, each subdetector of an experiment has its own data quality monitoring system, which plays an important role in checking the accuracy of the data.

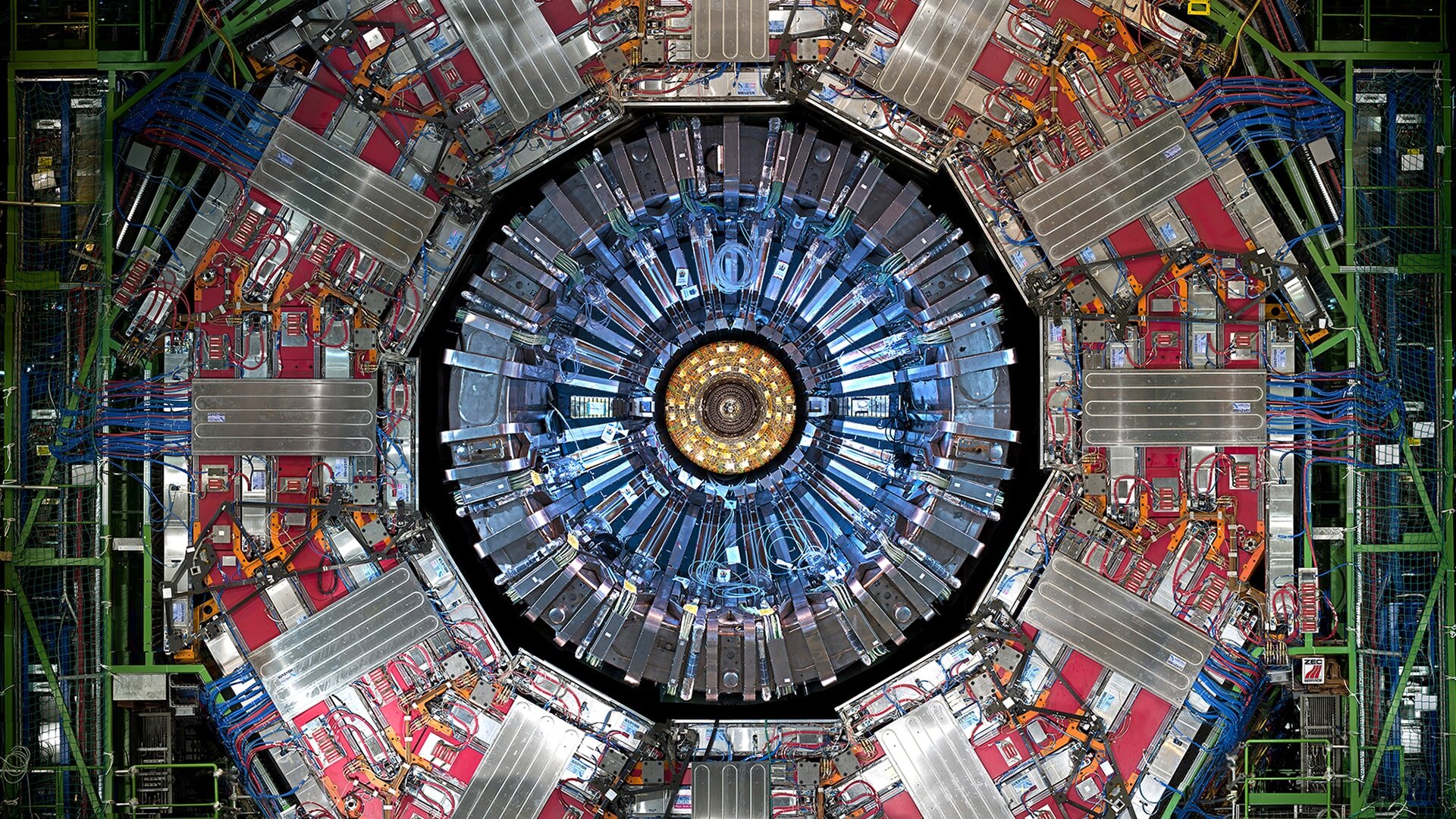

One such subdetector is the CMS electromagnetic calorimeter (ECAL), a crucial component of the CMS detector. The ECAL measures the energy of particles, mainly electrons and photons, produced in collisions at the LHC, allowing physicists to reconstruct particle decays. Ensuring the accuracy and reliability of data recorded in the ECAL is paramount for the successful operation of the experiment.

During the ongoing Run 3 of the LHC, CMS researchers have developed and deployed an innovative machine-learning technique to enhance the current data quality monitoring system of the ECAL. Detailed in a recent publication in the journal Computing and Software for Big Science, this new approach promises to make the detection of data anomalies more accurate and efficient.

The new system works in real time, a capability that is essential in the fast-paced LHC environment for quickly detecting and correcting detector issues, which in turn improves the overall quality of the data. The system was deployed in the barrel of the ECAL in 2022 and in the endcaps in 2023.

The traditional CMS data quality monitoring system consists of conventional software that relies on a combination of predefined rules, thresholds and manual inspections to alert the team in the control room to potential detector issues. This approach involves setting specific criteria for what constitutes normal data behavior and flagging deviations. While effective, these methods can miss subtle or unexpected anomalies that don’t fit predefined patterns.
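
To make the rule-based idea concrete, here is a minimal sketch of a threshold check on a small 2D map of channel values. It is only an illustration: the function name `flag_by_threshold`, the limits `LOW` and `HIGH`, and the toy occupancy map are all assumptions, not taken from the CMS software.

```python
import numpy as np

# Hypothetical limits relative to the map median; the article gives no real values.
LOW, HIGH = 0.2, 5.0

def flag_by_threshold(occupancy, low=LOW, high=HIGH):
    """Flag channels whose value, relative to the map median, lies outside [low, high]."""
    reference = np.median(occupancy)
    ratio = occupancy / reference
    return (ratio < low) | (ratio > high)

# Toy 8x8 occupancy map standing in for a detector region (made-up numbers).
rng = np.random.default_rng(0)
occupancy = rng.normal(100.0, 5.0, size=(8, 8))
occupancy[2, 3] = 0.0    # dead channel: far below the lower limit
occupancy[5, 5] = 900.0  # hot channel: far above the upper limit
occupancy[6, 1] = 80.0   # subtle 20% drop: stays inside the limits

flags = flag_by_threshold(occupancy)
print(np.argwhere(flags))  # positions of the flagged channels
```

In this toy example the dead and hot channels are flagged, while the 20% drop passes unnoticed, which is the kind of subtle deviation a fixed rule can miss.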

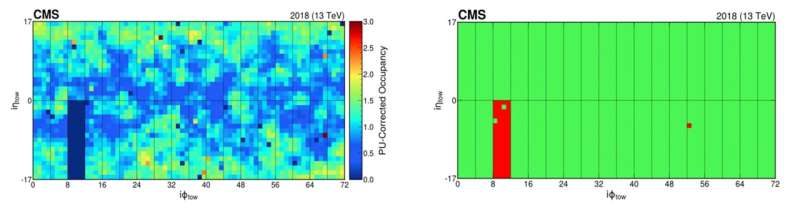

In contrast, the new machine-learning-based system is able to detect these anomalies, complementing the traditional data quality monitoring system. It is trained to recognize the normal detector behavior from existing good data and to detect any deviations. The cornerstone of this approach is an autoencoder-based anomaly detection system. Autoencoders, a specialized type of neural network, are designed for unsupervised learning tasks.
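
The principle of learning normal behavior from good data and flagging what cannot be reconstructed can be sketched in a few lines. The example below is not the CMS network: as a stand-in it uses a linear autoencoder fitted in closed form with a singular value decomposition (equivalent to principal component analysis), and the image size, latent dimension, threshold choice and toy data are all assumptions made for illustration.

```python
import numpy as np

def fit_linear_autoencoder(good_images, n_latent):
    """Fit a linear encoder/decoder on good images of shape (n, h, w)."""
    flat = good_images.reshape(len(good_images), -1)
    mean = flat.mean(axis=0)
    # Rows of vt are the principal directions of the good data.
    _, _, vt = np.linalg.svd(flat - mean, full_matrices=False)
    return mean, vt[:n_latent]

def loss_map(image, mean, basis):
    """Per-pixel squared reconstruction error of a single image."""
    flat = image.ravel() - mean
    latent = basis @ flat          # encode into the small latent space
    reconstructed = basis.T @ latent  # decode back to image space
    return ((flat - reconstructed) ** 2).reshape(image.shape)

# Toy "good" data: a fixed spatial pattern with a varying overall scale plus noise.
rng = np.random.default_rng(1)
pattern = 100.0 + 10.0 * np.sin(np.linspace(0.0, np.pi, 8))[:, None] * np.ones((8, 8))

def make_good(n):
    scale = rng.normal(1.0, 0.1, size=(n, 1, 1))
    return scale * pattern + rng.normal(0.0, 1.0, size=(n, 8, 8))

mean, basis = fit_linear_autoencoder(make_good(200), n_latent=2)

# Threshold taken from held-out good images (an assumed, simple choice).
threshold = max(loss_map(img, mean, basis).max() for img in make_good(50))

test_image = make_good(1)[0]
test_image[4, 6] = 0.0  # inject a dead channel
loss = loss_map(test_image, mean, basis)
anomalous = loss > threshold
print(np.unravel_index(loss.argmax(), loss.shape))  # location of the largest error
```

Because the model has only seen good images, it reconstructs the normal pattern well and the injected dead channel produces by far the largest reconstruction error.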

The system, fed with ECAL data in the form of 2D images, is also adept at spotting anomalies that evolve over time thanks to novel correction strategies. This aspect is crucial for recognizing patterns that may not be immediately apparent but develop gradually.
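
The article does not spell out the correction strategies, so the following is only one hypothetical way to use time information: multiplying the per-channel loss maps of consecutive time intervals, so that errors persisting across intervals grow while one-off fluctuations are suppressed. The function name `time_corrected`, the window length and the toy loss values are assumptions.

```python
import numpy as np

def time_corrected(loss_maps, window=3):
    """Multiply each loss map with the following window-1 maps.

    loss_maps has shape (t, h, w); the result has shape (t - window + 1, h, w).
    """
    loss_maps = np.asarray(loss_maps, dtype=float)
    n_out = loss_maps.shape[0] - window + 1
    out = np.ones((n_out,) + loss_maps.shape[1:])
    for k in range(window):
        out *= loss_maps[k:k + n_out]
    return out

# Toy loss maps for 5 consecutive intervals on a 4x4 grid, baseline loss 1.0.
losses = np.ones((5, 4, 4))
losses[1, 0, 0] = 10.0  # large but transient spike in a single interval
losses[:, 2, 2] = 3.0   # moderate error that persists in every interval

corrected = time_corrected(losses, window=3)
print(corrected[:, 0, 0])  # transient channel after correction
print(corrected[:, 2, 2])  # persistent channel after correction
```

In a single interval the transient spike looks worse than the persistent error, but after combining three intervals the persistent channel dominates every corrected map.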

The novel autoencoder-based system not only boosts the performance of the CMS detector but also serves as a model for real-time anomaly detection across various fields, highlighting the transformative potential of artificial intelligence.

For example, industries that manage large-scale, high-speed data streams, such as the finance, cybersecurity and health care industries, could benefit from similar machine-learning-based systems for anomaly detection, enhancing their operational efficiency and reliability.

More information:

CMS collaboration, Autoencoder-Based Anomaly Detection System for Online Data Quality Monitoring of the CMS Electromagnetic Calorimeter, Computing and Software for Big Science (2024). DOI: 10.1007/s41781-024-00118-z

Citation:

CMS develops new AI algorithm to detect anomalies at the Large Hadron Collider (2024, November 13), retrieved 13 November 2024 from https://phys.org/news/2024-11-cms-ai-algorithm-anomalies-large.html
